Handling Edge Cases in Machine Learning with Synthetic Data

Synthetic training data has become increasingly popular in computer vision due to its ability to overcome the challenges associated with collecting real-world data, particularly for edge cases or outliers. Edge cases can represent rare or challenging scenarios, and can be difficult to capture in real-world datasets, leading to models that are not robust to these conditions.

In this blog post, we will showcase a simple example of poor edge case handling in a face segmentation model trained on a public dataset of real images, and how synthetic data can be used to better consider the edge cases.

While a public dataset is often a good starting point for training any Machine Learning problem, these datasets are often designed for algorithm development and benchmarking of different algorithms. I.e. achieving good performance on these datasets is meant to be difficult!

As an example, in this public dataset, the validation and test sets are randomly selected from the full dataset. Although that may seem like a good strategy to guarantee that the training set well represents the distribution of test images, it also means that there will be some edge cases in the test set that falls just outside the distribution of the training set.

Left: Illustration of how some randomly selected samples (red) can fall outside of its original distribution (blue), once removed from it.

Center: A ground truth segmentation from the test set of a public dataset with a head rotation that has low representation in the corresponding training set.

Right: The prediction of the same sample (right) by a model trained on the training part of the same dataset, correct predictions are darkened to highlight the errors.

In recent years, a more data centric machine learning approach has gained more attention[1]. To the point that today's groundbreaking language models are basically trained on the publicly available part of the entire internet (according to ChatGPT itself).

If we in our example take a data centric approach and allow ourselves to improve the training dataset by using a wider distribution of training samples than we expect that our computer vision model will be exposed to, it should be better prepared to handle the edge cases.

Left: Random samples from a uniform distribution, spanning further than the outliers in the test set from the previous image.

Center: A synthetic training image generated at a wider viewing angle than any image in the public test set (and training set) to ensure coverage of the edge cases.

Right: Ground truth segmentation overlayed on the image.

When evaluating the accuracy of a computer vision model, it's crucial to not only rely on randomly selected test images but also to explore the limits of the model's performance. So you don’t only know how likely your model is to fail, but also when.

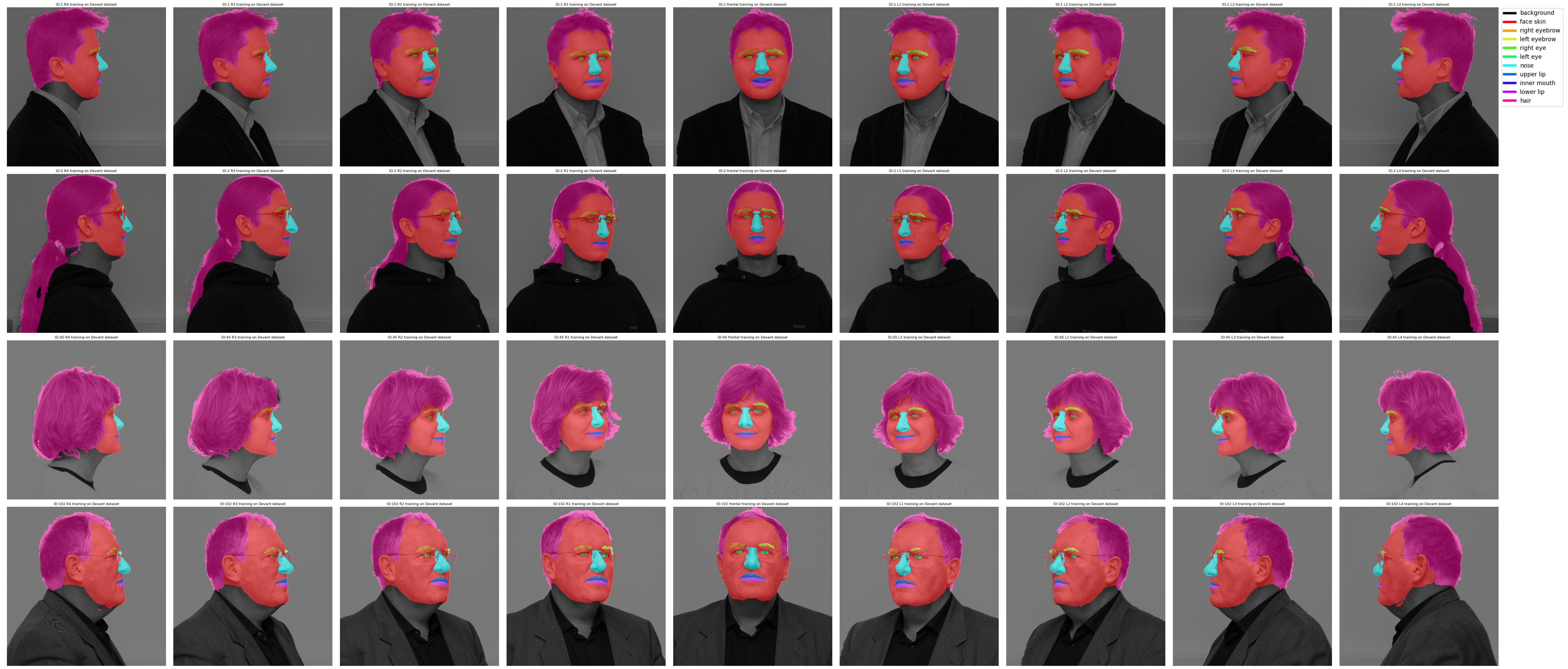

In our example, the SCface database[3] is particularly useful for this purpose as it contains a systematic variation of face angles from side to front to the other side. By evaluating the trained models on the SCface dataset, we can identify specific edge cases where the model struggles, and gain insights about the limitations.

Predictions by a Unet++[2] model trained on the training part of a public dataset (with very good results on its test set) and applied on the SCface mugshot images from -90° to +90° viewing angles.

By comparing the performance of models on the SCface dataset, we can gain a deeper understanding of their strengths and weaknesses and identify areas for improvement. These issues can then be addressed much faster with an iteration of synthetic data generation, rather than collecting and annotating real data. This systematic approach helps us push the boundaries of computer vision models and develop more robust and accurate solutions.

Predictions by a Unet++ model trained on a dataset of 200k in-house generated synthetic samples, with a wider range and uniform distribution of camera and head rotation angles, and applied on the SCface mugshot images.

The purpose of the test set in machine learning is to estimate real-world performance on new data, so it should ideally also reflect the real world’s distribution.

While it might seem like a good idea to add extra edge cases to the test set to ensure good outlier performance, actually ensuring it by iterating until the performance is good would exploit the test set and invalidate its real purpose. These cases would be better kept in a validation set, whose main purpose is to evaluate the model’s readiness without exposing the actual test set. For this, a synthetic validation dataset may be a good choice, as it allows for systematic variations of each parameter, so the performance can be measured over each variation individually.

The purpose of the training set however, is to achieve as good performance on unseen data as possible, in order to maximize the real-world performance. When creating your training data, don’t aim to reach the same distribution as your field of use — go wider!

[1] https://www.forbes.com/sites/gilpress/2021/06/16/andrew-ng-launches-a-campaign-for-data-centric-ai/

[2] Unet++: A nested u-net architecture for medical image segmentation

Z Zhou, MM Rahman Siddiquee, N Tajbakhsh, J Liang Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical …, 2018•Springer

[3] SCface - surveillance cameras face database, Mislav Grgic, Kresimir Delac, Sonja Grgic, Multimedia Tools and Applications Journal, Vol. 51, No. 3, February 2011, pp. 863-879, https://www.scface.org/